The analyses, opinions, and findings in this article represent the views of the authors and should not be interpreted as an official position of their employers. This is an informal think-piece, written as a point of interest; it is not intended to be an official statement of their employers’ policy or thinking.


The new decade started with three important developments that have underscored the central role communication technology plays in all areas of work and play.

- During the COVID-19 pandemic, which has severely restricted interactions in the physical world, communication technology was the quintessential tool that kept society afloat. More than half a century ago, the Internet started as an idea for a resilient communication infrastructure that could survive a global nuclear disaster. Fortunately, the very same digital architecture is immune to biological viruses, and it has shown its strength, usefulness, and resilience during the pandemic.

- The extent to which 5G is present in the news and discussed by the public is unprecedented; previous generations of wireless technology received nothing comparable. Unfortunately, this popularity does not stem from a surge of public interest in modulation, beamforming, or clever protocol design. It stems instead from health concerns about 5G, primarily rooted in conspiracy theories claiming that 5G has helped to spread the COVID-19 virus. We have even witnessed several cases in which base station towers have been set on fire.

- The extent to which 5G is present in geopolitics is also unprecedented. With 5G’s ambition to connect various vertical industries and billions of things, awareness of the criticality of the communication infrastructure has increased. The question of who can build and operate a wireless mobile infrastructure has become one of the hot topics in international politics.

The theme of this article is not an elaboration of these three issues; they are here merely to set the context and show the high societal impact that communication technology has in 2020. The present text can be seen as a follow-up to the two articles [1] and [2] on communication in the new decade, written previously by two of the authors. Here we attempt to provide a broad perspective on the hopes, challenges, and fears of communication technology in the coming decade. It covers a wide range of aspects, from applications and user experience, through the new economics of building and operating networks and potential security issues, to, last but not least, ways to strengthen the scientific methodology. We have grouped these aspects into three categories:

- Long overdue. Connectivity solutions that were supposed to be delivered some time ago but, for various reasons, are still on the to-do list.

- Unavoidable trends. These include the ever-present AI/ML, an increased focus on privacy and security, and changes in wireless architectures.

- Revolution in waiting. Technologies that have the potential to significantly shake the “linear” evolution of communication technology.

Long Overdue

Remote Presence

When [1] was written in January 2020, the first topic on the list for the new decade was remote presence, motivated by the desire to reduce work-related travel and thereby CO2 emissions. No one could have imagined that this would become the most pressing technology question within weeks, when massive societal lockdowns moved all work activities online. The online experience has shown that the current communication infrastructure can offer a lot, but we are still far from a truly immersive remote presence.

Let us first consider reliability. Regardless of the online platform used, participants regularly exchange application-layer control information to check the connection status (“Can everybody hear me?”, “Sorry, I lost my connection”, etc.). A simple indicator that we have reached a milestone in remote presence would be the absence of such questions, as it would mean that failures occur with negligible probability. However, this is not easy to guarantee: a platform for online communication can be seen as a stack of different modules (modems, drivers, application software, etc.), each possibly provided by a different supplier, and the reliability of the connection is, roughly, the product of the reliabilities of the individual modules. This makes high overall reliability difficult to attain. A step in the right direction is network slicing, which isolates a connection from other connections so that its requirements can be met. The slicing mechanism should be extended to the overall end-to-end connectivity, including the human-computer interface.
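A minimal sketch of the series model behind this statement, assuming that module failures are independent so that the end-to-end reliability is the product of the per-module reliabilities. The module list and its values are hypothetical, chosen only to show how quickly the number of nines erodes.

```python
import math


def end_to_end_reliability(module_reliabilities):
    """Series model: the connection works only if every module works.

    Assumes independent module failures, so the reliabilities multiply.
    """
    return math.prod(module_reliabilities)


def nines(reliability):
    """Number of 'nines' of a reliability value, e.g. 0.999 -> 3.0."""
    return -math.log10(1.0 - reliability)


def per_module_target(target, num_modules):
    """Reliability that each of num_modules identical modules needs so that
    their product reaches the end-to-end target."""
    return target ** (1.0 / num_modules)


# Hypothetical stack: radio link, modem, driver, OS, conferencing application
stack = [0.99999, 0.99999, 0.9999, 0.9999, 0.999]
r = end_to_end_reliability(stack)
print(f"end-to-end: {r:.6f} ({nines(r):.2f} nines)")
print(f"per-module target for 5 nines end to end: {per_module_target(0.99999, 5):.7f}")
```

Even though most modules in this example offer four or five nines, the product falls just short of 0.9988, i.e. fewer than three nines. Conversely, five nines end to end over five modules requires about 0.999998 from each module, close to six nines.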

Next comes latency. 5G has put a focus on ultra-reliable low-latency communication (URLLC), where high reliability should be achieved under very stringent latency constraints. However, this focus is mostly on the latency of the wireless link, whereas each of the aforementioned modules introduces its own, likely stochastic, latency. Understanding the end-to-end latency and finding methods to mitigate high delays, and thus preserve the impression of reliability, is another fundamental building block. This would need to go beyond the current latency-reliability characterization of a link [3] and encompass other important contributors to latency, such as video encoding.
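To illustrate why link latency alone says little about end-to-end performance, the sketch below estimates the probability that the sum of several random per-module delays misses a deadline, i.e. one end-to-end latency-reliability point. The delay distributions and their parameters are purely illustrative assumptions, not measurements.

```python
import random


def sample_end_to_end_latency_ms(rng):
    """Draw one end-to-end latency sample (ms) as a sum of module delays.

    All distributions and parameters are illustrative assumptions.
    """
    radio = rng.expovariate(1.0)               # wireless link, mean 1 ms
    encoding = rng.uniform(10.0, 30.0)         # video encoding
    transport = rng.lognormvariate(2.5, 0.5)   # core network / Internet, median ~12 ms
    decoding = rng.uniform(5.0, 15.0)          # decoding and rendering
    return radio + encoding + transport + decoding


def deadline_miss_probability(deadline_ms, num_samples=50_000, seed=1):
    """Monte Carlo estimate of P(end-to-end latency > deadline_ms)."""
    rng = random.Random(seed)
    misses = sum(
        sample_end_to_end_latency_ms(rng) > deadline_ms for _ in range(num_samples)
    )
    return misses / num_samples


for deadline in (50, 75, 100):
    print(f"P(latency > {deadline} ms) ~ {deadline_miss_probability(deadline):.5f}")
```

In this toy model the radio link contributes only about 1 ms on average out of a total of roughly 45 ms, so the encoding and transport terms dominate the tail.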

Finally, building technology for a sufficiently good remote presence experience at massive scale is a multidisciplinary effort that, in addition to communication, includes areas such as signal processing, human-computer interfaces, and artificial intelligence. We expect significant demand in the coming years for a reliable, immersive remote presence experience. This expectation is supported by the fact that many businesses are starting to see working from home as a new normal; over the long run, the work environment will consist of a mix of physical and remote presence.

Communicating in hard-to-reach places

While 4G is available in large parts of most countries and operators have started to deploy 5G in densely populated areas, there is still a lot of work to do to provide people with the services they need, in all the places they need them. In developed countries, places with poor coverage are predominantly rural areas, whereas in developing and under-developed countries the reach of good mobile services is much lower overall. Going forward, there is a risk of a deeper coverage problem. For example, some services might require a shift to higher frequencies, which have a shorter range and a larger outdoor-to-indoor loss, creating a wider problem for coverage within premises. New platforms for delivering safety services on the road will require good mobile service not only along motorways and major roads, but also along minor roads. This may even create an additional safety problem: drivers used to the assistance enabled by urban connectivity may need extra effort to adapt to rural areas with limited connectivity. Mobile services will also play an important role for aerial platforms such as drones, so good coverage will have to be provided in the sky as well as on the ground. Another trend is the increased reliance on low Earth orbit (LEO) satellites and their envisioned integration with terrestrial networks.
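The range penalty of moving to higher frequencies can be illustrated with the Friis free-space path loss between isotropic antennas, FSPL = 20 log10(4πdf/c). This sketch ignores the outdoor-to-indoor loss and assumes that antenna gains stay fixed as the frequency changes; larger arrays at high frequencies can recover part of the loss. The 120 dB loss budget is a hypothetical value.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def free_space_path_loss_db(distance_m, freq_hz):
    """Friis free-space path loss between isotropic antennas, in dB."""
    return 20 * math.log10(4 * math.pi * distance_m * freq_hz / SPEED_OF_LIGHT)


def range_for_loss_m(max_loss_db, freq_hz):
    """Distance at which the free-space loss reaches max_loss_db."""
    return SPEED_OF_LIGHT * 10 ** (max_loss_db / 20) / (4 * math.pi * freq_hz)


budget_db = 120.0  # hypothetical maximum tolerable path loss
for f_ghz in (0.7, 3.5, 28.0):
    f_hz = f_ghz * 1e9
    print(f"{f_ghz:5.1f} GHz: loss at 1 km = {free_space_path_loss_db(1000, f_hz):6.1f} dB, "
          f"range for {budget_db:.0f} dB = {range_for_loss_m(budget_db, f_hz) / 1000:6.2f} km")
```

Under these assumptions, every doubling of the carrier frequency adds about 6 dB of loss and halves the range; going from 3.5 GHz to 28 GHz (a factor of 8) shrinks the free-space range eightfold.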

Solutions to these challenges are multidimensional and involve strong interaction among technology (e.g., enhanced coverage solutions), policy (e.g., coverage obligations), and market enablers (e.g., local operators or sharing agreements). From a technology perspective, which is the focus of this article, we note that 5G eMBB (enhanced Mobile BroadBand) was primarily designed to address connectivity use cases in dense areas and has thus been (in our opinion) a missed opportunity to address coverage issues. In designing 6G, we have the opportunity to natively include technology solutions for coverage. These include solutions at the component level (e.g., rethinking massive antenna arrays to target coverage instead of, or in addition to, capacity), at the architectural level (e.g., multihop or novel backhaul solutions), at the device level (e.g., devices designed to work for days without recharging), and solutions relying on the interaction between different types of networks (e.g., terrestrial and satellite networks, or mobile and fixed networks).

Unavoidable trends

Machine Learning (ML) & Artificial Intelligence (AI)

Judging by the level of FOMO (Fear Of Missing Out) that ML and AI are creating, communication engineering is gradually evolving into some form of union between classical theory and ML/AI methods. But rather than throwing various powerful ML/AI techniques at communication problems, we need to take a step back and ask which types of problems can be meaningfully addressed by ML/AI. As elaborated in [4], ML techniques can be used to solve two general types of problems: those facing either a model deficit or an algorithm deficit. We will argue for the unavoidability of ML/AI techniques by considering two exemplary aspects of communication technology in the new decade, both already touched upon above.

Regarding model deficit, a good example where learning techniques must be used is the assurance of high connection reliability. We usually state high-reliability requirements with many 9s, such as “probability of successful transmission higher than 99.999%”. But a statistically correct question here would be: with respect to which distribution is this 99.999% measured? And how do we know this distribution? Proponents of model-based design would argue that it is much more reliable than ML-based design, as the latter leads to unpredictable results. However, when we are talking about ultra-reliability, this reasoning is flawed. Even if we use a model-based design (e.g., a Rayleigh or Rician channel), the model has parameters that need to be estimated (i.e., learned) when the wireless system is deployed in a given environment. There is also no guarantee that statistical models well established for describing average behavior accurately describe the extreme cases that matter for ultra-reliability. Hence, having elements of learning in a wireless system that aims to provide high reliability is unavoidable [5]. As reliability requirements continue to increase (recall remote presence), ML will become an integral part of the deployment, performance assurance, and accounting of wireless communication systems.
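A minimal sketch of the point that even a model-based design must learn its parameters, assuming Rayleigh fading so that the channel power gain is exponentially distributed with an unknown mean. The design estimates the mean from a finite number of samples and picks the power threshold that would give a 10^-5 outage if the estimate were exact; the outage actually experienced under the true mean can then exceed the target. The last function computes how many error-free trials are needed before a 95% one-sided confidence bound supports a 10^-5 failure probability (the well-known “rule of three”, n ≈ 3/ε). This is an illustration only, not the statistical learning method of [5].

```python
import math
import random


def rayleigh_outage(threshold, mean_power):
    """P(|h|^2 < threshold) when |h|^2 is exponential with the given mean."""
    return -math.expm1(-threshold / mean_power)


def threshold_for_outage(target, mean_power):
    """Power threshold whose outage equals target under an exponential model."""
    return -mean_power * math.log1p(-target)


def learned_design_outage(num_samples, target=1e-5, true_mean=1.0, seed=0):
    """Estimate the mean from samples, design for target, and return the
    outage actually experienced under the true (unknown) mean."""
    rng = random.Random(seed)
    samples = [rng.expovariate(1.0 / true_mean) for _ in range(num_samples)]
    estimated_mean = sum(samples) / num_samples  # maximum-likelihood estimate
    threshold = threshold_for_outage(target, estimated_mean)
    return rayleigh_outage(threshold, true_mean)


def trials_to_certify(target, confidence=0.95):
    """Error-free trials needed so that (1 - target)^n <= 1 - confidence,
    i.e. zero observed failures rule out failure rates above target."""
    return math.ceil(math.log(1.0 - confidence) / math.log1p(-target))


for n in (10, 100, 10_000):
    print(f"{n:>6} samples -> realized outage {learned_design_outage(n):.2e}")
print("error-free trials to support 1e-5 at 95%:", trials_to_certify(1e-5))
```

With few samples, an overestimated mean leads to a threshold that is too aggressive, and the realized outage overshoots the target; certifying the target purely from observations requires roughly 300,000 error-free trials.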

In terms of algorithm deficit, a good example is the support of services with heterogeneous requirements in the same system, which is the trademark of 5G. For example, designing an algorithm for allocating wireless resources to a large number of connections (some requiring high reliability, others relying on sporadic transmissions, etc.) is an extremely complex task, with a large number of parameters and unknowns. As such, it will likely be difficult to formulate as a “clean” optimization problem, which sets the stage for the use of ML/AI techniques. As the volume and heterogeneity of connections will only increase in the years to come, this use of ML/AI, along with performance assurance (model deficit), will become indispensable.
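As a toy illustration of learning an allocation policy without a clean optimization model, the sketch below uses an epsilon-greedy multi-armed bandit to choose which fraction of resource blocks to reserve for URLLC traffic, with the remainder serving best-effort traffic. The traffic model, penalty, and candidate shares are hypothetical; a bandit is simply one compact learning technique chosen for brevity, and practical schedulers face far larger state and action spaces.

```python
import random

SHARES = [0.1, 0.2, 0.3, 0.4]  # candidate fractions of blocks reserved for URLLC


def slot_reward(urllc_share, rng, total_blocks=50, miss_penalty=10.0):
    """Reward of one scheduling slot in a hypothetical two-service system.

    URLLC demand is drawn uniformly from 5..15 blocks; failing to serve it
    costs miss_penalty. Unreserved blocks serve best-effort traffic, which
    is rewarded by the fraction of blocks it receives.
    """
    urllc_demand = rng.randint(5, 15)
    reserved = round(urllc_share * total_blocks)
    penalty = miss_penalty if urllc_demand > reserved else 0.0
    return (total_blocks - reserved) / total_blocks - penalty


def epsilon_greedy(rounds=5000, epsilon=0.1, seed=0):
    """Learn which reservation share maximizes the average slot reward."""
    rng = random.Random(seed)
    counts = [0] * len(SHARES)
    means = [0.0] * len(SHARES)
    for _ in range(rounds):
        if rng.random() < epsilon:
            arm = rng.randrange(len(SHARES))                      # explore
        else:
            arm = max(range(len(SHARES)), key=lambda a: means[a])  # exploit
        reward = slot_reward(SHARES[arm], rng)
        counts[arm] += 1
        means[arm] += (reward - means[arm]) / counts[arm]  # running average
    best = max(range(len(SHARES)), key=lambda a: means[a])
    return SHARES[best], means


best_share, estimates = epsilon_greedy()
print("learned URLLC share:", best_share)
print("estimated rewards:", [round(m, 2) for m in estimates])
```

In this toy setting, reserving 30% of the blocks always covers the URLLC demand while leaving the most capacity for best-effort traffic, and the bandit converges to it from observed rewards alone.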

More antennas & deeper hardware integration

Cellular base stations traditionally consist of many separate boxes, such as passive antennas, signal amplifiers, and baseband radio units. Some boxes are mounted in the tower, while others are deployed in a cabinet on the ground, which calls for extensive cabling. In 5G, these functionalities are being integrated into a single tower-mounted box called an active antenna unit. This is a major step from a practical implementation perspective, even if it matches what communication-theoretic researchers have envisioned for decades. The higher level of hardware integration enables new features such as Massive MIMO (multiple-input multiple-output) [6], where the many antenna elements in the unit are individually controlled to steer the transmitted signals in different spatial directions and to transmit multiple signals in different directions simultaneously. When a single vendor controls both the hardware and the software, a deeper level of optimization can be performed, and new features can be introduced through software upgrades. ML/AI can even be used to fine-tune the software individually at every base station, learning the local traffic and propagation conditions. A first indication is the recent “dynamic spectrum sharing” feature, where 4G equipment from the last few years can be used simultaneously for both 4G and 5G thanks to software updates. Since wireless data traffic grows by 30-40% per year, a substantial fraction of this growth can potentially be handled by yearly fine-tuning of the software. The Massive MIMO features rolled out in 5G are still rudimentary compared to what is theoretically possible.
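The steering idea can be sketched with a uniform linear array, assuming half-wavelength spacing, far-field line-of-sight propagation, and two hypothetical users at ±30°. Each beam uses the unit-norm weight vector w = a(θ)/√M matched to its user’s direction; practical Massive MIMO typically relies on estimated channels rather than assumed angles.

```python
import cmath
import math


def steering_vector(num_antennas, angle_deg, spacing=0.5):
    """Far-field response of a uniform linear array; spacing in wavelengths."""
    phase_step = 2 * math.pi * spacing * math.sin(math.radians(angle_deg))
    return [cmath.exp(1j * phase_step * m) for m in range(num_antennas)]


def beam_gain(num_antennas, beam_deg, user_deg):
    """Power gain |w^H a(user)|^2 with unit-norm beam w = a(beam) / sqrt(M)."""
    a_beam = steering_vector(num_antennas, beam_deg)
    a_user = steering_vector(num_antennas, user_deg)
    inner = sum(b.conjugate() * u for b, u in zip(a_beam, a_user))
    return abs(inner) ** 2 / num_antennas


M = 64
users = {"A": 30.0, "B": -30.0}  # hypothetical user directions in degrees
for beam_name, beam_angle in users.items():
    for user_name, user_angle in users.items():
        gain = beam_gain(M, beam_angle, user_angle)
        print(f"beam toward {beam_name} -> user {user_name}: gain {gain:.3g}")
```

With 64 antennas, each beam delivers a power gain of 64 toward its intended user, while for these particular directions the gain toward the other user is essentially zero, so both users can be served on the same time-frequency resource. For arbitrary directions the leakage is small but not exactly zero.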

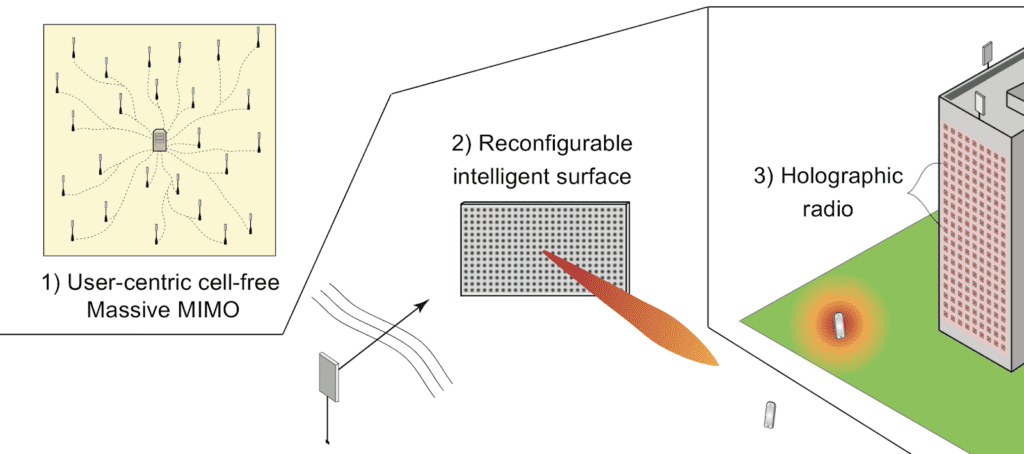

Another continuing trend, which will persist beyond 5G, is the ever denser deployment of antennas. Base station towers used to be kilometers apart, and now base stations are deployed on every other rooftop in urban areas. Since we are running out of elevated places to erect antennas, new deployment paradigms are unavoidable. Three such trends are illustrated in Figure 2. The first option is to distribute the antenna elements over the coverage area instead of gathering them in boxes at discrete locations. This is called user-centric cell-free Massive MIMO [7] since it puts the user at the center: instead of building networks where base stations are surrounded by connected users, each user is surrounded by antennas that jointly serve the user. These antennas can be integrated into cables and thereby “hidden” from the user, just as we do not think about the power cables that run along the walls of our homes. One key benefit of such distributed antenna deployments is reduced variation in the data speeds that users experience at different places [8]; ideally, no user should ever experience having only “one bar”.
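A toy comparison of the SNR variation across a coverage area, under strongly idealized assumptions: a hypothetical 1 km × 1 km square, power-law path loss with exponent 3.7, no shadowing or fading, perfect channel knowledge, equal total transmit power, and coherent conjugate beamforming. The collocated case places all 64 antennas at the center; the distributed case places one antenna on each point of an 8 × 8 grid and lets all of them serve every user (a fully cell-free variant; a user-centric network would use only nearby antennas). This illustrates the qualitative claim and does not reproduce the results of [8].

```python
import math
import random

AREA_M = 1000.0       # side of a square coverage area in meters (hypothetical)
PATHLOSS_EXP = 3.7    # assumed path-loss exponent
MIN_DIST_M = 10.0     # distance clamp to avoid a singularity near an antenna
M = 64                # total number of antennas in both deployments

GRID = math.isqrt(M)  # 8 x 8 grid of single-antenna access points
SPACING_M = AREA_M / GRID
DISTRIBUTED_APS = [((i + 0.5) * SPACING_M, (j + 0.5) * SPACING_M)
                   for i in range(GRID) for j in range(GRID)]


def channel_gain(distance_m):
    """Large-scale gain beta = d^-alpha (no shadowing, no small-scale fading)."""
    return max(distance_m, MIN_DIST_M) ** -PATHLOSS_EXP


def collocated_snr(x, y):
    """All M antennas at the area center with coherent beamforming: M * beta.
    Total transmit power and noise power are normalized to one."""
    return M * channel_gain(math.hypot(x - AREA_M / 2, y - AREA_M / 2))


def distributed_snr(x, y):
    """One antenna per grid point, equal power per antenna, coherent
    conjugate beamforming: (sum_m sqrt(beta_m))^2 / M."""
    amplitude = sum(math.sqrt(channel_gain(math.hypot(x - ax, y - ay)))
                    for ax, ay in DISTRIBUTED_APS)
    return amplitude ** 2 / M


def percentile(values, q):
    """Floor-index percentile for 0 <= q <= 1."""
    ordered = sorted(values)
    return ordered[int(q * (len(ordered) - 1))]


def snr_spread_db(snr_fn, num_users=5000, seed=0):
    """Gap in dB between the 95th and 5th percentile SNR over uniformly
    dropped users."""
    rng = random.Random(seed)
    snrs = [snr_fn(rng.uniform(0, AREA_M), rng.uniform(0, AREA_M))
            for _ in range(num_users)]
    return 10 * math.log10(percentile(snrs, 0.95) / percentile(snrs, 0.05))


print(f"collocated spread:  {snr_spread_db(collocated_snr):.1f} dB")
print(f"distributed spread: {snr_spread_db(distributed_snr):.1f} dB")
```

In this idealized setting, the gap between the 95th and 5th percentile SNR is noticeably smaller for the distributed deployment; the absolute numbers depend entirely on the assumptions above.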

Wireless signals are scattered and reflected by objects along the way between the transmitter and the receiver. If this behavior can be tuned, an optimized pathway can be created. Such a smart wireless propagation environment can potentially be created by deploying reconfigurable intelligent surfaces [9], which help the signals find their way from the transmitter to the receiver. This is the second example in Figure 2. In a nutshell, it entails deploying thin surfaces made of metamaterial that are configured in real time to mimic a curved mirror that captures signal energy and focuses it at a desired location. One can view such a surface as a passive full-duplex relay that can be hidden behind a poster on the wall [10]. Strategic deployment is needed to help the radio signals reach places where the existing objects are not taking them.
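A minimal sketch of configuring a surface to behave like a focusing mirror, assuming a narrowband channel, ideal lossless elements with freely adjustable phase, and perfect channel knowledge. Each element n contributes a cascaded channel c_n (transmitter to element to receiver), and its phase shift is chosen so that every reflected path adds in phase with the direct path h_d. The channel values are randomly generated placeholders.

```python
import cmath
import math
import random


def received_amplitude(direct, cascaded, phases):
    """|h_d + sum_n c_n * exp(j*theta_n)|, where c_n is the end-to-end
    channel via surface element n (transmitter -> element -> receiver)."""
    total = direct + sum(c * cmath.exp(1j * t) for c, t in zip(cascaded, phases))
    return abs(total)


def aligned_phases(direct, cascaded):
    """Phase shifts that rotate every reflected path into phase with the
    direct path, so that all contributions add constructively."""
    return [cmath.phase(direct) - cmath.phase(c) for c in cascaded]


def random_channel(rng, std):
    """Complex Gaussian coefficient (Rayleigh-fading assumption)."""
    return complex(rng.gauss(0.0, std), rng.gauss(0.0, std))


rng = random.Random(7)
num_elements = 100  # hypothetical surface size
h_d = random_channel(rng, 0.1)  # weak direct path, e.g. partially blocked
cascaded = [random_channel(rng, 0.3) * random_channel(rng, 0.3) for _ in range(num_elements)]

random_phases = [rng.uniform(0.0, math.tau) for _ in range(num_elements)]
gain_random = received_amplitude(h_d, cascaded, random_phases) ** 2
gain_aligned = received_amplitude(h_d, cascaded, aligned_phases(h_d, cascaded)) ** 2
print(f"received power gain: random phases {gain_random:.3f}, aligned {gain_aligned:.3f}")
```

Because the aligned amplitude equals |h_d| plus the sum of all |c_n|, the received power grows roughly with the square of the number of elements, whereas a random configuration adds the reflections incoherently.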

A third option is holographic radio [11], an attempt to build antenna arrays with a continuous aperture that are fed by a limited number of baseband radio units. This approach takes inspiration from holographic imaging, where the interference pattern between the light scattered by an object and a reference beam is recorded [6]. In this case, it is the way radio waves travel from a transmitter to the array that is recorded and later used for both transmit and receive beamforming. The vision is to build antenna arrays that are much larger than in current technology, while being thin and easy to integrate into existing construction elements. Deployment on the facade of a building is shown in Figure 2.
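A minimal sketch of the record-and-reuse idea, assuming a 1 m linear aperture sampled at half-wavelength spacing, a 30 GHz carrier, free-space spherical-wave propagation, and unit-amplitude elements. The array “records” the phase profile of the wave arriving from a source and then transmits its phase conjugate, which refocuses the energy on the source location; because the aperture is large relative to the distance, a point in the same direction at twice the range receives far less. This phase-conjugation beamformer is a simplified stand-in, not the specific design of [11].

```python
import cmath
import math

WAVELENGTH_M = 0.01                 # 30 GHz carrier (assumed)
WAVENUMBER = 2 * math.pi / WAVELENGTH_M
NUM_ELEMENTS = 200                  # half-wavelength spacing -> ~1 m aperture
ELEMENT_X = [(n - (NUM_ELEMENTS - 1) / 2) * WAVELENGTH_M / 2 for n in range(NUM_ELEMENTS)]


def wavefront(point):
    """Phase of a spherical wave from point=(x, y) at each element (on y=0)."""
    x, y = point
    return [cmath.exp(-1j * WAVENUMBER * math.hypot(x - ex, y)) for ex in ELEMENT_X]


def focusing_gain(recorded, target):
    """Power gain at target when transmitting with the phase-conjugated
    recording, normalized so that perfect focusing yields NUM_ELEMENTS."""
    response = wavefront(target)
    inner = sum(r.conjugate() * a for r, a in zip(recorded, response))
    return abs(inner) ** 2 / NUM_ELEMENTS


source = (0.0, 2.0)             # transmitter 2 m in front of the array
recorded = wavefront(source)    # "record" how the wave arrives at the array
for target in [(0.0, 2.0), (0.0, 4.0), (0.5, 2.0)]:
    print(f"gain at {target}: {focusing_gain(recorded, target):.1f}")
```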

Disaggregation, cloudification, and “openness”

Mobile networks are undergoing a revolution in the way they are designed, standardized, and deployed. The disaggregation of network hardware and software is reminiscent of the shift from vertically to horizontally integrated products that happened for personal computers during the early 1980s. Apart from the functions that must be carried out inside the antenna unit, radio and network functions will increasingly be deployed as software running on virtual machines or containers on top of general-purpose hardware (network disaggregation). Related to this, the boundaries between radio, network, and IT infrastructure are becoming increasingly blurred (network cloudification) in different parts of the value chain. At the same time, pushed on the supply side by the trends above and on the demand side by the need to reduce expenditures, monetize the network, and increase supply chain diversity, interfaces between the different radio and network elements are being opened, in different ways and at different layers (“openness”). Notably, on the radio side, “Open Radio” proponents build on disaggregation to standardize open interfaces between the different radio elements, aiming at truly software-based multivendor deployments. While these trends are very much happening at present, they have massive implications for the future. First, in terms of skills: in a world where radio, network, and IT converge, what skills and professional profiles will be needed? Second, in terms of how mobile networks are designed: while the 5G core has been designed with a software-centric approach, 5G radio was largely designed with a traditional vertically integrated radio infrastructure in mind. What would a radio design natively built around network disaggregation, cloudification, and openness look like? Third, in terms of standardization: how will the role of standardization bodies change, given that some perceive them as occasionally leveraging specifications and closed interfaces to raise barriers to innovation? Fourth, pushed by forces at the intersection of technology, market, and geopolitics, what will the network+IT value chain look like in the future? Finally, what are the implications in terms of trust, privacy, security, and resilience? We touch on this last aspect in the next section.

Trust, privacy, security, resilience

A possible way to examine the criticality of the upcoming wireless infrastructure (5G, Wi-Fi 6, and a plethora of other IoT technologies) is to split it into two parts: (1) the resilience of the infrastructure and (2) the protection and trustworthiness of the data exchanged over the communication systems. As 5G starts to deliver services in critical sectors and industries, these challenges are exacerbated and the threat surface increases significantly.

The resilience of the communication infrastructure can be assured through diversity and redundancy. In this context, supply chain diversity means that the infrastructure does not depend on a single vendor, but rather on a system of well-defined interfaces that can accept components from different vendors. There is nothing new in this: the Internet was built on that premise, but this diversification now reaches the wireless access layers, which were usually seen as a single black box within the global Internet. The role of redundancy is to overprovision the infrastructure so that, upon intentional or unintentional failure of some of its elements, it can still provide some communication service with degraded quality rather than disrupting the service entirely. The trends of disaggregation, cloudification, and openness discussed above are essential for enabling diversity and redundancy.
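Redundancy is easiest to quantify under the simplifying assumption of independent failures. The sketch below contrasts a single path with parallel redundant paths and with a k-out-of-n arrangement that keeps running, possibly at reduced capacity, while enough units survive; the availability values are hypothetical. Failures with a common cause, such as a flaw in equipment from a single vendor, violate the independence assumption, which is one way to see why supply chain diversity matters alongside redundancy.

```python
from math import comb


def parallel_availability(path_availability, num_paths):
    """Probability that at least one of num_paths independent paths works."""
    return 1.0 - (1.0 - path_availability) ** num_paths


def k_of_n_availability(unit_availability, k, n):
    """Probability that at least k of n independent, identical units work.

    Models degraded service: the system stays up, possibly with reduced
    capacity, as long as at least k units survive.
    """
    p = unit_availability
    return sum(comb(n, i) * p**i * (1.0 - p) ** (n - i) for i in range(k, n + 1))


print(f"single path:     {parallel_availability(0.99, 1):.6f}")
print(f"two paths:       {parallel_availability(0.99, 2):.6f}")
print(f"3 of 4 units up: {k_of_n_availability(0.99, 3, 4):.6f}")
```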

Wireless standards are becoming increasingly secure. For example, starting with Release 15, 3GPP has introduced various security enhancements (e.g., enhanced subscriber privacy, enhanced 5G and 4G core interworking security, unified access-agnostic authentication, inter-PLMN roaming security, etc.). Further improvements were introduced in Release 16 to address the security requirements of so-called “vertical use cases”. However, besides new standard features, security has other important aspects, including the architecture, the use of legacy devices, the impact of emerging deployments based on virtualization or containerization, and the use of open network APIs. From a use-case perspective, the threat surface has also increased as wireless systems are used to provide services in sectors such as automation, health, and smart cities.

The issue of data protection and trustworthiness can in part be addressed by various cryptographic techniques and privacy-preserving algorithms. From the communication perspective, it is important to note that these techniques generally increase the amount of traffic that needs to be transferred through the network. Take, for example, blockchain, distributed ledgers, and smart contracts. The way they ensure trustworthiness can be summarized as follows: they rely on networked communication to make it hard for anyone to produce valid data content that is accepted by the majority of the participating nodes. This increases the amount of communication far beyond what is needed for the actual data content. See, for example, [12] for how IoT traffic changes when the trustworthiness of IoT data relies on distributed ledger technology.
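A back-of-the-envelope sketch of this traffic amplification under a deliberately simple, hypothetical model: each IoT reading becomes a signed transaction that is relayed once to each of num_nodes ledger nodes. The 64-byte signature and 32-byte public key correspond to Ed25519; the payload and header sizes are assumptions, and consensus messages are ignored, so real overheads (see [12]) differ.

```python
def ledger_traffic_bytes(payload, num_nodes, header=40, signature=64, public_key=32):
    """Bytes moved to record one reading on a ledger (hypothetical model):
    the signed transaction is relayed once to each of num_nodes nodes."""
    transaction = payload + header + signature + public_key
    return transaction * num_nodes


def overhead_factor(payload, num_nodes, **sizes):
    """Ledger traffic relative to a plain single upload of the payload."""
    return ledger_traffic_bytes(payload, num_nodes, **sizes) / payload


for nodes in (1, 10, 100):
    print(f"{nodes:>3} nodes: {overhead_factor(20, nodes):.1f}x the 20-byte reading")
```

Even this simplified accounting shows that the security-related fields and the replication across nodes, rather than the reading itself, dominate the traffic.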

In general, the new traffic patterns generated by techniques for ensuring trust, privacy, or security will fundamentally change the planning and optimization of communication systems, and they should be factored into the requirements of evolving systems.

Revolution in waiting

New Spectrum Models

Regardless of how bright or disruptive an idea in wireless communication is, its path to practical deployment and impact leads through the gates of spectrum regulation and allocation. The rules for spectrum use and allocation can be seen as the “axioms” on which wireless systems operate. These axioms change over a very long time frame, but the changes are tectonic and can transform wireless communication systems in a revolutionary way. This is why we have placed “new spectrum models” in the revolutionary part of this article.

Since the inception of mobile and wireless broadband technologies (e.g., Wi-Fi), spectrum allocation for mobile and local area services has been characterized by a dichotomy: nationwide exclusive spectrum allocations on one side and unlicensed spectrum on the other. In recent years, the world has started to move beyond this dichotomy. Local spectrum licenses have provided a bridge between these two worlds, effectively allowing anyone with a local spectrum license to deploy a mobile service in a specific location, using a standardized (4G or 5G) or proprietary technology.

At the same time, and related to local licenses, different forms of spectrum sharing have been proposed in recent years and, to a certain extent, implemented for mobile and wireless broadband services. These include, for example, sharing on a geographic basis (e.g., the 3.8-4.2 GHz local licenses in the UK, where local mobile services coexist with satellite and fixed link services) and on a vertical basis (e.g., between tiers 2 and 3 of the Citizens Broadband Radio Service (CBRS) in the USA, where users have different spectrum rights).
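The vertical, tier-based model can be sketched as priority-ordered access. In CBRS the three tiers are Incumbent Access, Priority Access, and General Authorized Access; in the toy below, requests are served tier by tier from a pool of channels. The channel counts and the allocation rule are hypothetical simplifications and do not represent how a Spectrum Access System actually assigns spectrum.

```python
from dataclasses import dataclass

# Lower number = stronger spectrum rights (tier 1 = incumbents).
TIER_RANK = {"incumbent": 1, "priority": 2, "general": 3}


@dataclass
class Request:
    user: str
    tier: str
    channels: int  # number of channels requested


def allocate(requests, total_channels):
    """Grant channels in order of tier; within a tier, in arrival order
    (sorted() is stable). Weaker tiers get whatever is left."""
    grants = {}
    remaining = total_channels
    for req in sorted(requests, key=lambda r: TIER_RANK[r.tier]):
        granted = min(req.channels, remaining)
        grants[req.user] = granted
        remaining -= granted
    return grants


requests = [
    Request("gaa-user", "general", 5),
    Request("radar", "incumbent", 3),
    Request("pal-holder", "priority", 4),
]
print(allocate(requests, total_channels=10))
```

The general-access user receives only the three channels left after the incumbent and the priority-access holder are served, which mirrors the idea that users in different tiers hold different spectrum rights.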

While these are certainly important first steps, there is still a lot to do to achieve a truly dynamic shared use of spectrum. Trends such as radio virtualization, agile RF, cloudification, and ML/AI could bring a new paradigm in spectrum sharing [13].

Quantum computing and communication

Communication engineering will likely not stay “classical” and detached from developments in quantum computing and communication. Although the most prominent role of quantum technology in communication is related to cryptography and security, communication engineers may find innovative ways to make steps towards the quantum internet and its integration with the classical internet. An inspiring read in this direction is [14], which discusses how Shannon’s classical theory of information (bits) is being complemented by quantum computing (qubits) and by approaches that merge neuron-inspired biology with information processing (neurons). One way to think about quantum communication devices is as building blocks that will be domesticated in communication engineering. Think of the way transistors transformed electronics: most of the people who designed ingenious transistor-based circuits were not experts in the quantum effects that drive the transistor’s operation, but treated it as a black box with a certain input/output behavior. It will be interesting to see how fascinating and counter-intuitive quantum effects will affect the way we build communication architectures and protocols.

Author Profiles

Petar Popovski (F’16) is a Professor at Aalborg University, where he is heading the section on Connectivity. He received his Dipl. Ing and M. Sc. degrees in communication engineering from the University of Sts. Cyril and Methodius in Skopje and the Ph.D. degree from Aalborg University in 2005. His research interests are in the area of wireless communication, communication theory and Internet of Things. In 2020 he published the book “Wireless Connectivity: An Intuitive and Fundamental Guide”. More details here [https://www.comsoc.org/petar-popovski]

Emil Björnson (SM’17) is an Associate Professor at Linköping University. He received the M.S. degree in engineering mathematics from Lund University, Sweden, in 2007, and the Ph.D. degree in telecommunications from the KTH Royal Institute of Technology, Sweden, in 2011. He has authored the textbooks “Optimal Resource Allocation in Coordinated Multi-Cell Systems” (2013) and “Massive MIMO Networks: Spectral, Energy, and Hardware Efficiency” (2017). He is dedicated to reproducible research and has made a large amount of simulation code publicly available. He performs research on MIMO communications, radio resource allocation, machine learning for communications, and energy efficiency. More details here [https://liu.se/en/employee/emibj29]

Federico Boccardi is a Principal Technology Advisor in the Ofcom Technology Group, where he leads the emerging technology team. He received M.Sc. and Ph.D. degrees in Telecommunication Engineering from the University of Padova, Italy, in 2002 and 2007, respectively, and a master’s degree in Strategy and Innovation from the Oxford Saïd Business School in 2014. Before joining Ofcom, he was with Bell Labs (Alcatel-Lucent) from 2006 to 2013 and with Vodafone R&D in 2014. He is a Visiting Professor at the University of Bristol and a Fellow of the IET.

References

[1] P. Popovski, “Five Big Questions for Communication Engineering for the Next Decade”, January 2020.

[2] E. Björnson, “Revitalizing the Research on Wireless Communications in a New Decade”, January 2020.

[3] P. Popovski, C. Stefanovic, J. J. Nielsen, E. de Carvalho, M. Angjelichinoski, K. F. Trillingsgaard, and A.-S. Bana, “Wireless Access in Ultra-Reliable Low-Latency Communication (URLLC),” IEEE Transactions on Communications, vol. 67, no. 8, pp. 5783-5801, Aug. 2019.

[4] O. Simeone, “A Very Brief Introduction to Machine Learning With Applications to Communication Systems,” IEEE Transactions on Cognitive Communications and Networking, vol. 4, no. 4, pp. 648-664, Dec. 2018.

[5] M. Angjelichinoski, K. F. Trillingsgaard, and P. Popovski, “A Statistical Learning Approach to Ultra-Reliable Low Latency Communication,” IEEE Transactions on Communications, vol. 67, no. 7, pp. 5153-5166, July 2019.

[6] E. Björnson, L. Sanguinetti, H. Wymeersch, J. Hoydis, T. L. Marzetta, “Massive MIMO is a Reality – What is Next? Five Promising Research Directions for Antenna Arrays,” Digital Signal Processing, vol. 94, pp. 3-20, November 2019.

[7] G. Interdonato, E. Björnson, H. Q. Ngo, P. Frenger, E. G. Larsson, “Ubiquitous Cell-Free Massive MIMO Communications,” EURASIP Journal on Wireless Communications and Networking, vol. 2019, no. 197, 2019.

[8] H. Q. Ngo, A. Ashikhmin, H. Yang, E. G. Larsson, and T. L. Marzetta, “Cell-free Massive MIMO versus small cells,” IEEE Transactions on Wireless Communications, vol. 16, no. 3, pp. 1834-1850, 2017.

[9] M. Di Renzo, A. Zappone, M. Debbah, M.-S. Alouini, C. Yuen, J. de Rosny, and S. Tretyakov, “Smart radio environments empowered by reconfigurable intelligent surfaces: How it works, state of research, and road ahead,” arXiv, abs/2004.09352v1, 2020.

[10] E. Björnson, Ö. Özdogan, E. G. Larsson, “Reconfigurable Intelligent Surfaces: Three Myths and Two Critical Questions,” arXiv, abs/2006.03377v1, 2020.

[11] C. Huang, S. Hu, G. C. Alexandropoulos, A. Zappone, C. Yuen, R. Zhang, M. Di Renzo, and M. Debbah, “Holographic MIMO Surfaces for 6G Wireless Networks: Opportunities, Challenges, and Trends,” IEEE Wireless Communications Magazine, to appear.

[12] P. Danzi, A. E. Kalør, R. B. Sørensen, A. K. Hagelskjær, L. D. Nguyen, C. Stefanovic, and P. Popovski, “Communication Aspects of the Integration of Wireless IoT Devices with Distributed Ledger Technology,” IEEE Network, vol. 34, no. 1, pp. 47-53, Jan./Feb. 2020.

[13] DARPA Spectrum Collaboration Challenge (SC2), https://www.darpa.mil/program/spectrum-collaboration-challenge

[14] D. Gil and W. M. J. Green, “The Future of Computing: Bits + Neurons + Qubits”, 2019, available at https://arxiv.org/abs/1911.08446